Text2FX - Harnessing CLAP Embeddings for Text-Guided Audio Effects

Annie Chu, Patrick O'Reilly, Julia Barnett, Bryan Pardo

This work was supported by NSF Award Number 2222369.

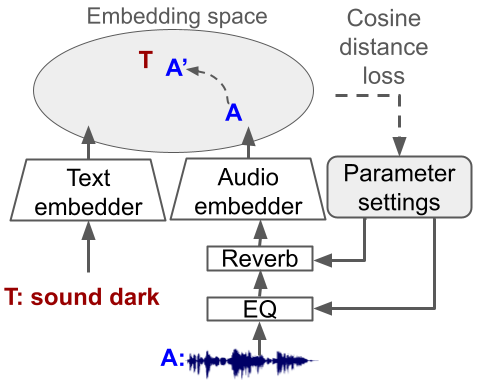

Text2FX leverages CLAP embeddings and differentiable digital signal processing to control audio effects, such as equalization and reverberation, using open-vocabulary natural language prompts (e.g., “make this sound in-your-face and bold”).

Text2FX does not train or fine-tune any model. Instead, it performs single-instance optimization: for each input recording, it adjusts the effect parameters so that the embedding of the processed audio moves toward the embedding of the text prompt within the existing embedding space. Because only the effect parameters change, the resulting transformations remain interpretable and disentangled, and the approach scales flexibly to open-vocabulary sound transformations. Although we demonstrate Text2FX with CLAP, the approach applies to any shared text-audio embedding space. Likewise, although we demonstrate it with equalization and reverberation, any differentiable audio effect can be controlled.
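To illustrate what single-instance optimization means in practice, here is a minimal toy sketch. It is not the authors' implementation. It assumes hypothetical stand-ins for CLAP (`toy_audio_embedding` and `TOY_TEXT_EMBEDDINGS`) and a hypothetical three-band EQ (`apply_eq`) that operates on band magnitudes rather than audio samples. It also estimates gradients with central finite differences, where a real differentiable-DSP pipeline would use automatic differentiation. Only the EQ gains for one input are updated, ascending the cosine similarity between the processed-audio embedding and the prompt embedding; no model weights change.

```python
import math

# HYPOTHETICAL stand-in for a CLAP text encoder: each prompt maps to a fixed
# vector over (low, mid, high) bands. A real system would call CLAP here.
TOY_TEXT_EMBEDDINGS = {
    "bright": [-1.0, 0.0, 1.0],
    "warm": [1.0, 0.0, -1.0],
}


def apply_eq(band_magnitudes, gains_db):
    """Hypothetical 3-band EQ: scale each band magnitude by its gain in dB."""
    return [m * 10 ** (g / 20) for m, g in zip(band_magnitudes, gains_db)]


def toy_audio_embedding(band_magnitudes):
    """HYPOTHETICAL stand-in for a CLAP audio encoder: mean-removed log magnitudes."""
    logs = [math.log10(m) for m in band_magnitudes]
    mean = sum(logs) / len(logs)
    return [v - mean for v in logs]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similarity(gains_db, band_magnitudes, text_emb):
    """Similarity between the EQ'd audio embedding and the prompt embedding."""
    processed = apply_eq(band_magnitudes, gains_db)
    return cosine_similarity(toy_audio_embedding(processed), text_emb)


def optimize_eq(band_magnitudes, prompt, steps=300, lr=2.0, max_db=12.0, eps=1e-4):
    """Gradient ascent on the EQ gains of ONE input; no model is trained.

    Central finite differences stand in for the autodiff gradients that a
    real differentiable-DSP implementation would compute.
    """
    text_emb = TOY_TEXT_EMBEDDINGS[prompt]
    gains = [0.0] * len(band_magnitudes)
    for _ in range(steps):
        grad = []
        for i in range(len(gains)):
            up = gains.copy()
            up[i] += eps
            down = gains.copy()
            down[i] -= eps
            diff = similarity(up, band_magnitudes, text_emb) - similarity(
                down, band_magnitudes, text_emb
            )
            grad.append(diff / (2 * eps))
        # Take an ascent step, then clamp each gain to the allowed EQ range.
        gains = [max(-max_db, min(max_db, g + lr * d)) for g, d in zip(gains, grad)]
    return gains, similarity(gains, band_magnitudes, text_emb)


if __name__ == "__main__":
    dark_clip = [2.0, 1.0, 1.5]  # hypothetical low/mid/high band magnitudes
    before = similarity([0.0, 0.0, 0.0], dark_clip, TOY_TEXT_EMBEDDINGS["bright"])
    gains, after = optimize_eq(dark_clip, "bright")
    print(f"similarity {before:.3f} -> {after:.3f}, gains (dB): {[round(g, 1) for g in gains]}")
```

For the "bright" prompt, the optimizer should cut the low band and boost the upper bands. In a real system, the toy embedding functions would be replaced by the encoders of a shared text-audio model, and `apply_eq` by a differentiable effect such as an EQ or reverb.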


Related publications

A. Chu, P. O'Reilly, J. Barnett, and B. Pardo, “Text2FX: Harnessing CLAP Embeddings for Text-Guided Audio Effects,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2025.